Get started

The IntelliNode module provides access to various language models, including OpenAI's ChatGPT, Cohere, Mistral AI, Anthropic's Claude, and the Llama V2 model through Replicate or AWS SageMaker.

This page demonstrates the setup for OpenAI's ChatGPT, Cohere, Mistral AI, and Anthropic, followed by two ways of integrating the Llama model: through Replicate's API or through a dedicated AWS SageMaker deployment. All models share the unified chatbot interface, so switching between them requires only minimal code changes. The snippets use await, so run them inside an async function.
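Because every provider is reached through the same chat(input) call, provider-specific code can be confined to building the chatbot and its input. The sketch below assumes only that a chatbot object exposes chat(input) and an input object exposes addUserMessage(text), as in the examples on this page. askOnce is a hypothetical helper, not part of IntelliNode. It also wraps the result in an array, since some examples below iterate over a list of responses while others log a single response.

```javascript
/**
 * Hypothetical helper (not an IntelliNode API): adds one user message to any
 * input object, sends it through any chatbot, and always resolves to an array.
 * Note: it mutates `input`, so repeated calls accumulate messages.
 */
async function askOnce(chatbot, input, question) {
  input.addUserMessage(question);
  const result = await chatbot.chat(input);
  // The examples on this page treat some results as lists and others as a single reply.
  return Array.isArray(result) ? result : [result];
}
```

Switching providers then only changes the two construction lines, for example askOnce(new Chatbot(OPENAI_API_KEY, 'openai'), new ChatGPTInput(system), question).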

ChatGPT Model

- Import the necessary modules from IntelliNode. This includes the Chatbot, ChatGPTInput, and ChatGPTMessage classes.

const { Chatbot, ChatGPTInput, ChatGPTMessage } = require('intellinode');

- To use OpenAI, you need a valid API key. Create a Chatbot instance, providing the API key and 'openai' as the provider.

const chatbot = new Chatbot(OPENAI_API_KEY, 'openai');

- Construct a chat input instance and add user messages:

const system = 'You are a helpful assistant.';

const input = new ChatGPTInput(system);

input.addUserMessage('Explain the plot of the Inception movie in one line.');

- Use the chatbot instance to send the chat input:

const responses = await chatbot.chat(input);

responses.forEach(response => console.log('- ', response));
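The snippets pass API keys either as placeholder variables such as OPENAI_API_KEY or from process.env. A small fail-fast check makes a missing key obvious before any request is sent. requireEnv is a hypothetical helper, not an IntelliNode API.

```javascript
/**
 * Hypothetical helper: returns a non-empty environment variable (trimmed)
 * or throws a descriptive error, so a missing key fails before any network call.
 */
function requireEnv(name) {
  const value = process.env[name];
  if (typeof value !== 'string' || value.trim() === '') {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value.trim();
}
```

Usage: const chatbot = new Chatbot(requireEnv('OPENAI_API_KEY'), 'openai');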

ChatGPT Streaming

To use ChatGPT streaming with IntelliNode, call the chatbot.stream method to send the chat input and receive the response as a stream of text chunks:

let response = '';

for await (const contentText of chatbot.stream(input)) {
  response += contentText;
  console.log('Received chunk:', contentText);
}

With chatbot.stream, you receive the response incrementally as it is generated instead of waiting for the complete reply. The stream function is supported for the openai provider only.
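Since chatbot.stream(input) is consumed with for await, it behaves as an async iterable of text chunks. The sketch below factors the accumulation loop into a hypothetical collectStream helper and exercises it with a stand-in async generator (fakeStream, also hypothetical) so it runs without an API key; in real code you would pass chatbot.stream(input) instead.

```javascript
/**
 * Hypothetical helper: concatenates the chunks of any async iterable of strings,
 * calling onChunk for each one (e.g. to print partial output as it arrives).
 */
async function collectStream(stream, onChunk = () => {}) {
  let text = '';
  for await (const chunk of stream) {
    text += chunk;
    onChunk(chunk);
  }
  return text;
}

// Stand-in for chatbot.stream(input): yields a few chunks asynchronously.
async function* fakeStream() {
  for (const part of ['Dreams ', 'within ', 'dreams.']) {
    yield part;
  }
}
```

Usage with the real stream: const full = await collectStream(chatbot.stream(input), (chunk) => process.stdout.write(chunk));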

Cohere Model

Initialize the chatbot with Cohere's Coral model and web search capabilities.

- Import the necessary modules.

const { Chatbot, CohereInput, SupportedChatModels } = require('intellinode');

- Initialize the chatbot object with a valid API key from cohere.com.

const bot = new Chatbot(process.env.COHERE_API_KEY, SupportedChatModels.COHERE);

- Prepare the input with the Cohere web search extension enabled:

const input = new CohereInput('You are a helpful computer programming assistant.', {web: true});

input.addUserMessage('What is the difference between Python and Java?');

- Call the chatbot and parse the responses.

const responses = await bot.chat(input);

responses.forEach((response) => console.log('- ' + response));
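Requests that use the web search option may take noticeably longer than plain chat. If you want an upper bound on waiting, one option is to race the call against a timer. A minimal sketch using only standard promises; withTimeout is a hypothetical helper, and it does not cancel the underlying HTTP request, it only stops waiting for it.

```javascript
/**
 * Hypothetical helper: resolves with the promise's value, or rejects if it does
 * not settle within `ms` milliseconds. The original request keeps running;
 * only the wait is abandoned.
 */
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${ms} ms`)), ms);
  });
  // Clear the timer whichever side settles first, so it does not keep the process alive.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}
```

Usage: const responses = await withTimeout(bot.chat(input), 60000);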

Mistral AI

Mistral AI provides open-source models, including mixture-of-experts models.

- Import the Chatbot, MistralInput, and SupportedChatModels modules.

const { Chatbot, MistralInput, SupportedChatModels } = require('intellinode');

- Initialize the chatbot object with a valid API key from mistral.ai.

const mistralBot = new Chatbot(apiKey, SupportedChatModels.MISTRAL);

- Prepare the input and select your preferred Mistral model, such as mistral-tiny or mistral-medium.

const input = new MistralInput('You are an art expert.', {model: 'mistral-medium'});

input.addUserMessage('Who painted the Mona Lisa?');

- Call the chatbot and parse the responses.

const responses = await mistralBot.chat(input);
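Because the model is just an option on the input, trying one model first and falling back to another only requires building a new input per attempt. A sketch under that assumption: chatWithFallback and its send callback are hypothetical. In real code, send(model) would create new MistralInput(system, { model }), add the user message, and return mistralBot.chat(input).

```javascript
/**
 * Hypothetical helper: calls send(model) for each model in order and returns the
 * first successful result with the model that produced it. If every model fails,
 * it throws one error listing each failure.
 */
async function chatWithFallback(models, send) {
  const errors = [];
  for (const model of models) {
    try {
      return { model, result: await send(model) };
    } catch (err) {
      errors.push(`${model}: ${err.message}`);
    }
  }
  throw new Error(`All models failed -> ${errors.join('; ')}`);
}
```

For example, chatWithFallback(['mistral-medium', 'mistral-tiny'], send) tries mistral-medium first and only uses mistral-tiny if that call fails.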

Anthropic

Anthropic provides models with large context windows, such as Claude 3.

- Import the Chatbot, AnthropicInput, and SupportedChatModels modules.

const { Chatbot, AnthropicInput, SupportedChatModels } = require('intellinode');

- Initialize the chatbot object with a valid API key from console.anthropic.com.

const bot = new Chatbot(apiKey, SupportedChatModels.ANTHROPIC);

- Prepare the input and select your preferred Claude model.

const input = new AnthropicInput('You are an art expert.', {model: 'claude-3-sonnet-20240229'});

input.addUserMessage('Who painted the Mona Lisa?');

- Call the chatbot and parse the responses.

const responses = await bot.chat(input);
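A large context window still has a limit. Before sending a long document, a rough size check can help. The sketch below uses the common rule of thumb of about four characters per English token, which is only an approximation (Anthropic's own tokenizer is authoritative); the 200,000-token default reflects the published Claude 3 context window, and the reply reserve is an arbitrary choice. roughlyFitsContext is a hypothetical helper.

```javascript
/**
 * Hypothetical helper: rough pre-flight check of whether a prompt is likely to fit
 * a model's context window while leaving room for the reply.
 * Uses ~4 characters per token, a crude heuristic for English text only.
 */
function roughlyFitsContext(
  text,
  { contextTokens = 200000, reservedForReply = 4096, charsPerToken = 4 } = {}
) {
  const estimatedTokens = Math.ceil(text.length / charsPerToken);
  return estimatedTokens + reservedForReply <= contextTokens;
}
```

If the check fails, you could split the document or summarize it in parts before calling bot.chat(input).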

Llama V2 Model

Llama V2 can be integrated in two ways:

- Replicate's API: simple integration.

- AWS SageMaker: hosted in your own AWS account for extra privacy and control (see the SageMaker prerequisite steps below).

Replicate's Llama Integration

- Import the necessary classes.

const { Chatbot, LLamaReplicateInput, SupportedChatModels } = require('intellinode');

- You'll need a valid API key. This time, it should be for replicate.com.

const chatbot = new Chatbot(REPLICATE_API_KEY, SupportedChatModels.REPLICATE);

- Create the chat input with LLamaReplicateInput:

const system = 'You are a helpful assistant.';

const input = new LLamaReplicateInput(system);

input.addUserMessage('Explain the plot of the Inception movie in one line.');

- Use the chatbot instance to send the chat input:

const response = await chatbot.chat(input);

console.log('- ', response);
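Calls to hosted model APIs such as Replicate's can occasionally fail transiently, for example on network errors or rate limiting. One common pattern is to retry with exponential backoff. The sketch below is a generic, hypothetical retry helper, not an IntelliNode feature; it retries every error, so in practice you may want to retry only errors you know to be transient.

```javascript
/**
 * Hypothetical helper: runs fn(), retrying on failure with exponential backoff
 * (baseMs, 2 * baseMs, 4 * baseMs, ...). Rethrows the last error after `retries` retries.
 */
async function retry(fn, { retries = 3, baseMs = 500 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      if (attempt >= retries) throw err;
      const delay = baseMs * 2 ** attempt;
      await new Promise((resolve) => setTimeout(resolve, delay));
    }
  }
}
```

Usage: const response = await retry(() => chatbot.chat(input));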

Advanced Settings

You can create the input with the desired model name:

// import the config loader

const {Config2} = require('intellinode');

// llama 13B model (default)

const input13b = new LLamaReplicateInput(system, {model: Config2.getInstance().getProperty('models.replicate.llama.13b')});

// llama 70B model (pass whichever input you need to chatbot.chat)

const input70b = new LLamaReplicateInput(system, {model: Config2.getInstance().getProperty('models.replicate.llama.70b')});
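To avoid duplicating the constructor call, the config key can be chosen from a size parameter. This sketch assumes only the two keys shown above exist; replicateLlamaKey is a hypothetical helper, and its result would be passed to Config2.getInstance().getProperty(...).

```javascript
// Config keys taken from the examples above; no other sizes are assumed to exist.
const REPLICATE_LLAMA_KEYS = {
  '13b': 'models.replicate.llama.13b', // default
  '70b': 'models.replicate.llama.70b',
};

/** Hypothetical helper: maps a model size such as '70B' to its IntelliNode config key. */
function replicateLlamaKey(size = '13b') {
  const normalized = String(size).toLowerCase();
  // Own-property check so names like 'constructor' are not mistaken for sizes.
  if (!Object.prototype.hasOwnProperty.call(REPLICATE_LLAMA_KEYS, normalized)) {
    const known = Object.keys(REPLICATE_LLAMA_KEYS).join(', ');
    throw new Error(`Unknown Llama size "${size}"; expected one of ${known}`);
  }
  return REPLICATE_LLAMA_KEYS[normalized];
}
```

Usage: const input = new LLamaReplicateInput(system, {model: Config2.getInstance().getProperty(replicateLlamaKey('70b'))});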

AWS SageMaker Integration

IntelliNode can also connect to a Llama V2 model deployed on AWS SageMaker, which gives you an additional layer of control over how the model is hosted.

IntelliNode Integration

- Import the necessary classes:

const { Chatbot, LLamaSageInput, SupportedChatModels } = require('intellinode');

- With AWS SageMaker, you provide the URL of your API Gateway (and its key, if you configured one). The steps to deploy your model and get the URL are in the Prerequisite section below:

const chatbot = new Chatbot(
  null /* replace with your API Gateway key, or keep null if the gateway does not use a key */,
  SupportedChatModels.SAGEMAKER,
  {url: process.env.AWS_API_URL /* replace with your API Gateway URL */}
);

- Create the chat input with LLamaSageInput:

const system = 'You are a helpful assistant.';

const input = new LLamaSageInput(system);

input.addUserMessage('Explain the plot of the Inception movie in one line.');

- Use the chatbot instance to send the chat input:

const response = await chatbot.chat(input);

console.log('Chatbot response:' + response);
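The SageMaker chatbot depends on AWS_API_URL pointing at your API Gateway endpoint. A quick validation with Node's built-in URL class can catch a missing or malformed value before the first request. requireHttpsUrl is a hypothetical helper; requiring HTTPS is a choice made by this sketch rather than an IntelliNode rule, though API Gateway invoke URLs use HTTPS.

```javascript
/**
 * Hypothetical helper: reads a URL from the environment and checks that it is a
 * well-formed HTTPS URL. Returns the original string unchanged.
 */
function requireHttpsUrl(name) {
  const raw = process.env[name];
  if (!raw) {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  let parsed;
  try {
    parsed = new URL(raw);
  } catch {
    throw new Error(`${name} is not a valid URL: ${raw}`);
  }
  if (parsed.protocol !== 'https:') {
    throw new Error(`${name} must use https, got ${parsed.protocol}`);
  }
  return raw;
}
```

Usage: {url: requireHttpsUrl('AWS_API_URL')} as the third argument to new Chatbot(...).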

Prerequisite to Integrate AWS SageMaker and IntelliNode

Follow these steps to host the Llama V2 model on AWS SageMaker:

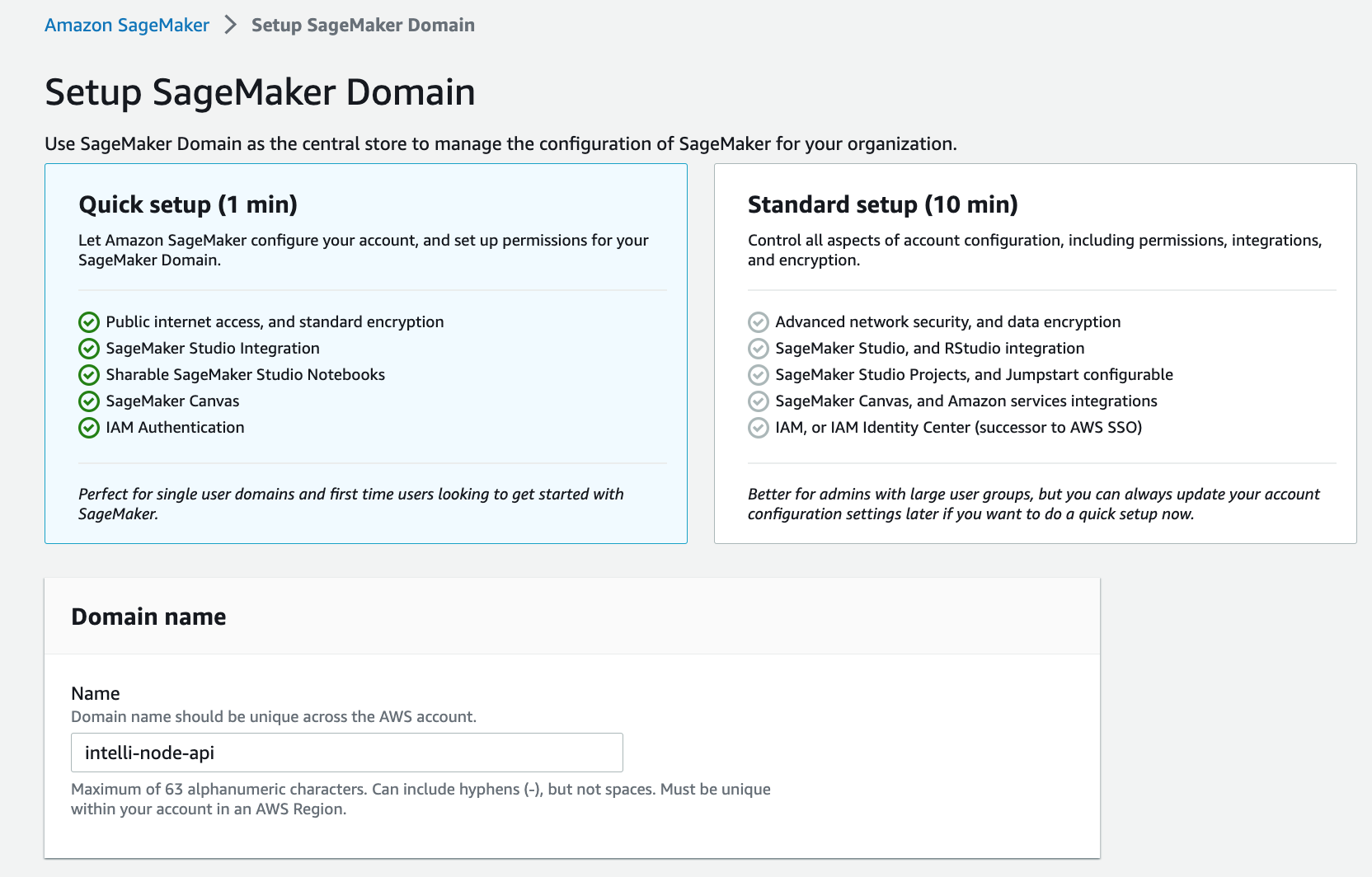

- Create a SageMaker Domain: Begin by setting up a domain on your AWS SageMaker. This step establishes a controlled space for your SageMaker operations.

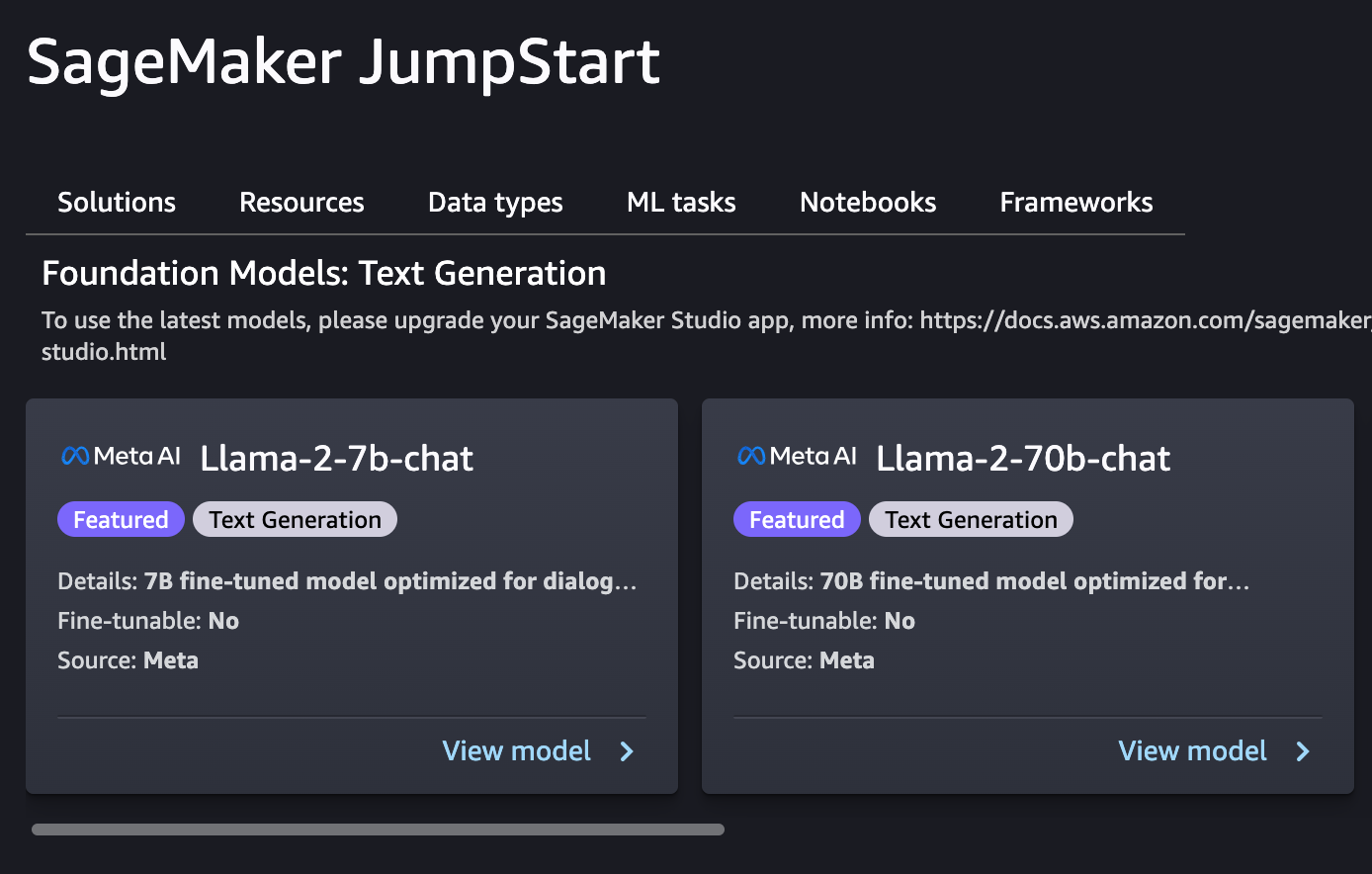

- Deploy the Llama Model: Utilize SageMaker JumpStart to deploy the Llama model you plan to integrate.

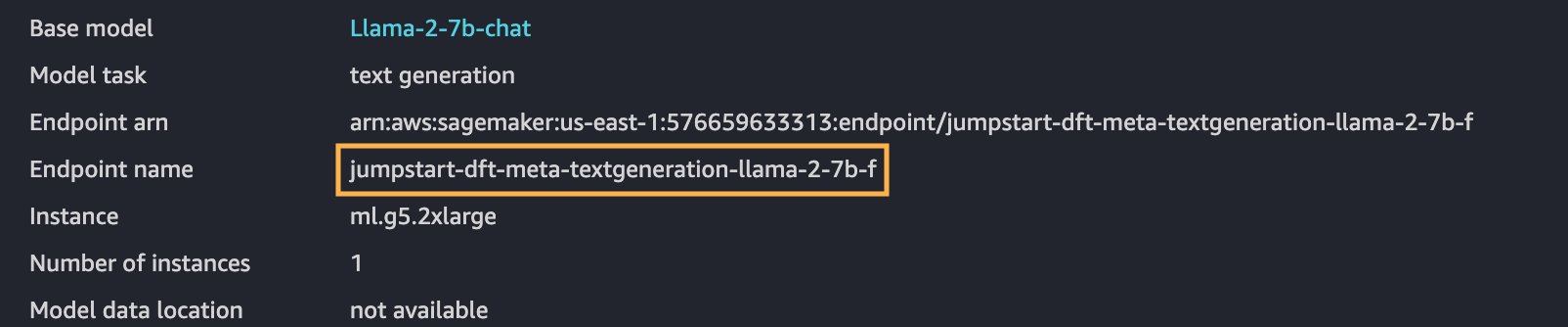

- Copy the Endpoint Name: Once the model is deployed, note the endpoint name; you need it in a later step.

-

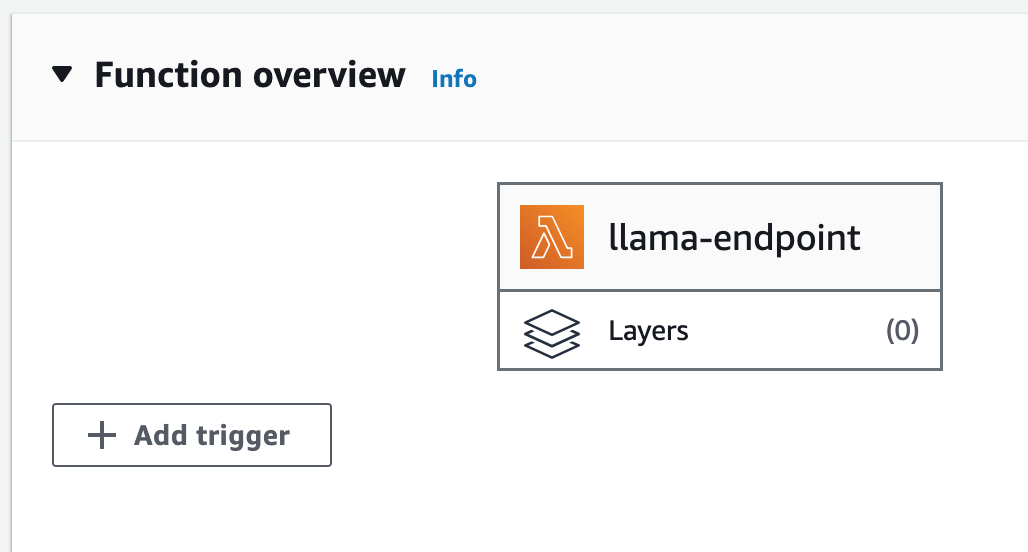

Create a Node.js Lambda Function: AWS Lambda allows running the back-end code without managing servers. Create a Node.js lambda function to use for integrating the deployed model.

- Set Up Environment Variable: Create an environment variable named llama_endpoint whose value is the SageMaker endpoint name.

- IntelliNode Lambda Import: Import the prepared Lambda zip file, which establishes the connection to your SageMaker Llama deployment. The zip file can be found in the lambda_llama_sagemaker directory.

-

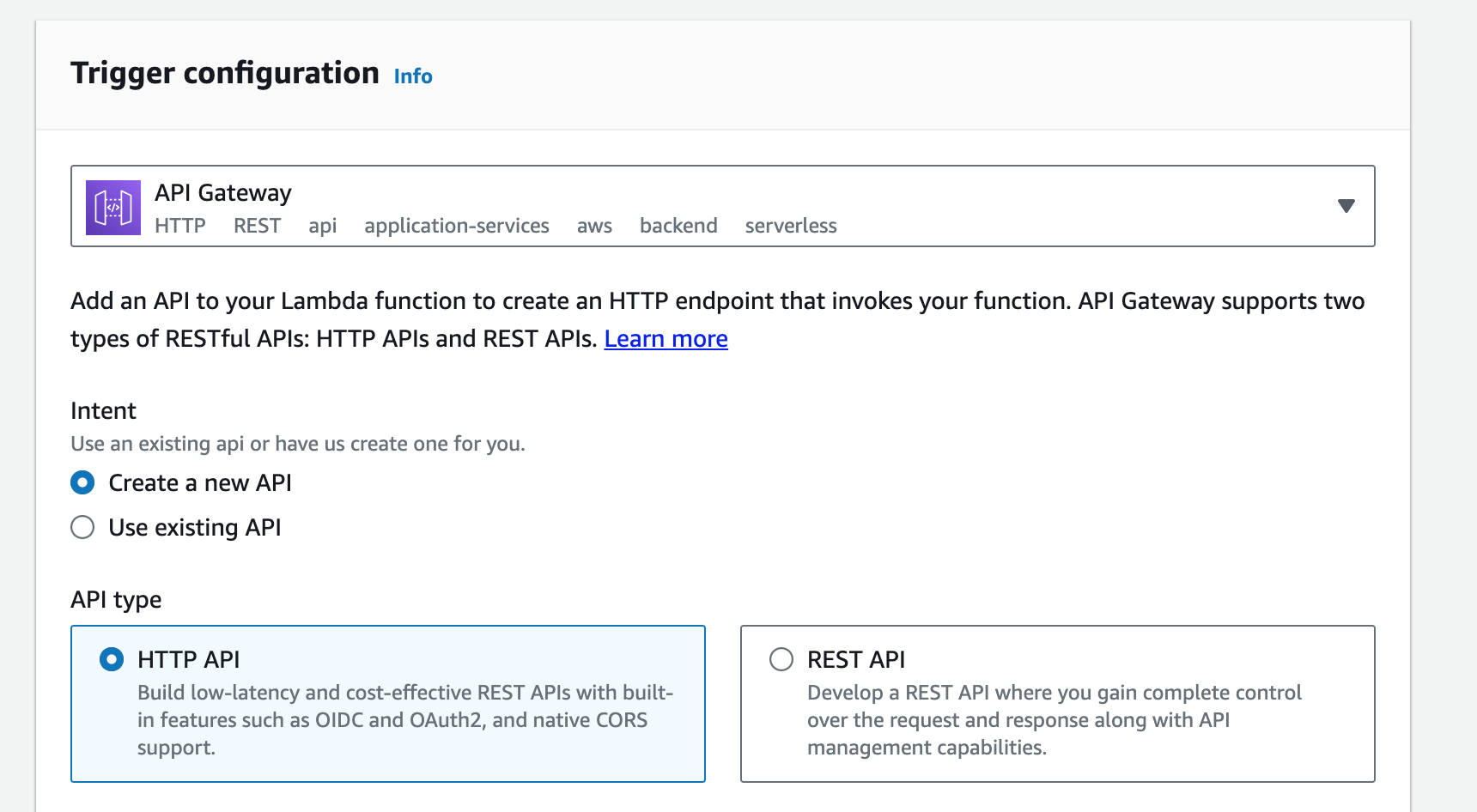

API Gateway Configuration: Click on the "Add trigger" option on the Lambda function page, and select "API Gateway" from the list of available triggers.

- Lambda Function Settings: Update the Lambda role to grant the permissions needed to invoke SageMaker endpoints, and extend the function's timeout (the default is 3 seconds) to accommodate the model's processing time. Make these adjustments in the "Configuration" tab of your Lambda function.

Once these steps are complete, AWS SageMaker hosts and runs the Llama V2 model behind your API Gateway URL, and you can connect to it from IntelliNode as shown in the integration steps above.
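For the permission step, the Lambda execution role needs to be allowed to call sagemaker:InvokeEndpoint. The sketch below builds a least-privilege IAM policy document scoped to a single endpoint in the standard aws partition. The region, account ID, and endpoint name are values you supply, and buildInvokePolicy is a hypothetical helper; you would attach the resulting JSON to the role in the IAM console or with your infrastructure tooling.

```javascript
/**
 * Hypothetical helper: builds an IAM policy document that allows invoking one
 * SageMaker endpoint and nothing else (standard "aws" partition ARN).
 */
function buildInvokePolicy({ region, accountId, endpointName }) {
  for (const [name, value] of Object.entries({ region, accountId, endpointName })) {
    if (!value) throw new Error(`${name} is required`);
  }
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Action: 'sagemaker:InvokeEndpoint',
        Resource: `arn:aws:sagemaker:${region}:${accountId}:endpoint/${endpointName}`,
      },
    ],
  };
}
```

Usage: console.log(JSON.stringify(buildInvokePolicy({ region: 'us-east-1', accountId: '123456789012', endpointName: 'my-llama-endpoint' }), null, 2)); with your own values substituted.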